Introduction

AI agents are becoming increasingly common, with demonstrations, videos, and social media posts showcasing systems that can reason, act, and automate complex tasks. However, when developers attempt to replicate these examples locally, they often encounter familiar challenges: fragile setups, excessive access privileges, unclear boundaries, and environments that only function on a single machine.

This article presents a practical and responsible approach to building AI agents using Docker Compose. It emphasizes tool-driven architectures that prioritize reproducibility, security, and community engagement.

What an AI agent really is (no hype)

Before touching Docker, let’s clarify the fundamentals.

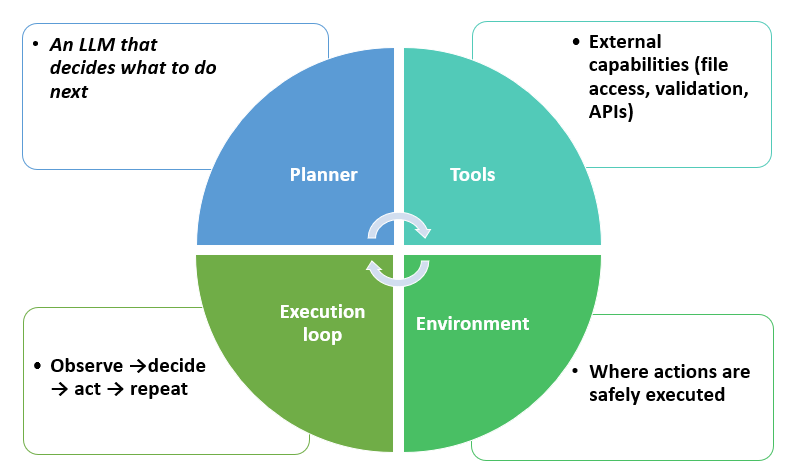

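An AI agent is not just a prompt. It is a system composed of several distinct concerns: a model that proposes the next step, reasoning and orchestration logic that decides what to do with it, tools that take actions, and a bounded environment those tools are allowed to touch.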

Most failures happen because these concerns are collapsed into a single script.
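To make that separation concrete, here is a minimal Python sketch (Python 3.9+) in which the model, the orchestration loop, and the tools are separate pieces. `FakeModel`, the `TOOLS` registry, and the JSON message format are hypothetical stand-ins chosen for illustration. A real agent would call an actual LLM and would likely route tool calls to a separate service.

```python
import json
from typing import Callable, Protocol


class Model(Protocol):
    """Anything that turns a conversation into the next message (an LLM client in practice)."""

    def complete(self, messages: list[dict]) -> str: ...


# Hypothetical tool registry: the agent may only call what is listed here.
TOOLS: dict[str, Callable[[dict], str]] = {
    "add": lambda args: str(args["a"] + args["b"]),
    "echo": lambda args: str(args["text"]),
}


class FakeModel:
    """Stand-in model: requests one tool call, then answers with the tool result."""

    def complete(self, messages: list[dict]) -> str:
        last = messages[-1]
        if last["role"] == "tool":
            return json.dumps({"answer": f"The result is {last['content']}"})
        return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})


def run_agent(model: Model, task: str, max_steps: int = 5) -> str:
    """Orchestration loop: ask the model, run any requested tool, feed the result back."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        reply = json.loads(model.complete(messages))
        if "answer" in reply:
            return reply["answer"]
        name = reply.get("tool")
        if name not in TOOLS:
            # Unknown tools are refused instead of executed.
            messages.append({"role": "tool", "content": f"error: unknown tool {name!r}"})
            continue
        result = TOOLS[name](reply.get("args", {}))
        messages.append({"role": "tool", "content": result})
    return "error: step limit reached"


print(run_agent(FakeModel(), "What is 2 + 3?"))  # → The result is 5
```

Because each concern lives in its own piece, swapping `FakeModel` for a real client or moving `TOOLS` behind an API changes one part without touching the loop.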


Why Docker Compose fits agent-based systems

Docker Compose gives agent experiments the structure they usually lack:

- Clear service boundaries: each service runs in its own container with its own dependencies, so components do not conflict.

- Reproducible environments: the whole setup is defined in one file, so anyone with Docker can recreate it.

- Network isolation: services talk over a private project network, and nothing is exposed to the host unless you publish a port.

- Explicit configuration: images, volumes, environment variables, and ports are declared in the Compose file, so there is no hidden setup.

- Easy onboarding: newcomers can read one file to understand the system and start it with a single command.

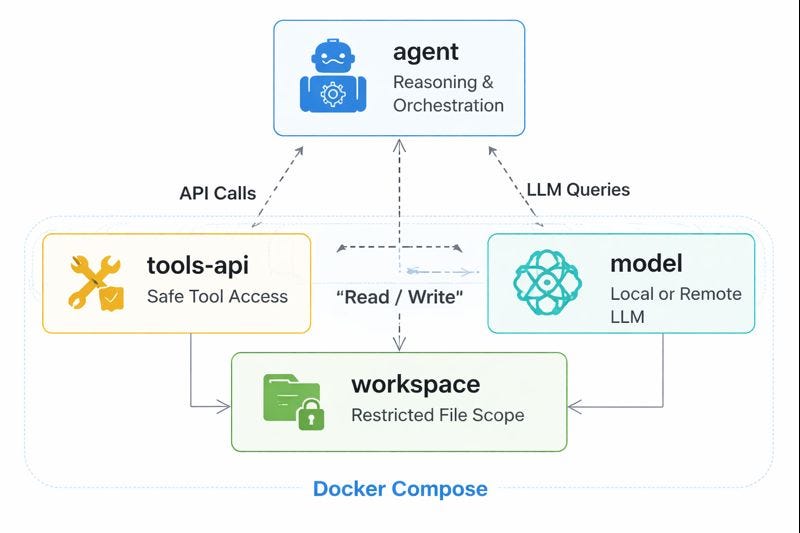

Instead of giving an agent unrestricted access to the host machine, we expose tools as services that the agent calls through well-defined APIs.

This improves safety, because the agent can only do what the tool services allow, and clarity, because every capability is visible in the architecture.
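A tools service along these lines can be sketched with only the Python standard library. The `/call` endpoint, the JSON payload shape, port 8000, and the two allowlisted tools are hypothetical choices for illustration, not a prescribed API. The point is that the service, not the agent, decides what is allowed to run.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical allowlist: the only operations the agent can trigger through this service.
ALLOWED_TOOLS = {
    "word_count": lambda args: len(str(args.get("text", "")).split()),
    "upper": lambda args: str(args.get("text", "")).upper(),
}


def handle_tool_call(payload: dict) -> tuple[int, dict]:
    """Validate a tool request and return (HTTP status, JSON body)."""
    name = payload.get("tool")
    if name not in ALLOWED_TOOLS:
        return 403, {"error": f"tool {name!r} is not allowed"}
    args = payload.get("args", {})
    if not isinstance(args, dict):
        return 400, {"error": "args must be an object"}
    return 200, {"result": ALLOWED_TOOLS[name](args)}


class ToolHandler(BaseHTTPRequestHandler):
    """Accepts POST /call with a JSON body and replies with JSON."""

    def do_POST(self) -> None:
        if self.path != "/call":
            status, body = 404, {"error": "not found"}
        else:
            try:
                length = int(self.headers.get("Content-Length", 0))
                payload = json.loads(self.rfile.read(length) or b"{}")
            except ValueError:  # bad length, invalid JSON, or undecodable bytes
                payload = None
            if isinstance(payload, dict):
                status, body = handle_tool_call(payload)
            else:
                status, body = 400, {"error": "expected a JSON object"}
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # Bind to all interfaces so other containers on the Compose network can reach it.
    HTTPServer(("0.0.0.0", 8000), ToolHandler).serve_forever()
```

Run as its own Compose service without a `ports:` entry, this server is not published on any host port; other services on the project network reach it by its service name.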

A simple agent architecture

Core services:

- `agent`: reasoning and orchestration

- `tools-api`: safe tool access

- `model`: local or remote LLM

- `workspace`: restricted file scope

Each service does one job, and Docker Compose wires them together.
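The `workspace` service's job, a restricted file scope, largely comes down to one check: every path the agent requests must stay inside a single root directory. Here is a minimal sketch, assuming a hypothetical mount point `/workspace` and helper names chosen for illustration (requires Python 3.9+ for `Path.is_relative_to`):

```python
from pathlib import Path

# Hypothetical mount point; in Compose this would be a volume mounted into the container.
WORKSPACE_ROOT = Path("/workspace")


def resolve_in_workspace(relative: str, root: Path = WORKSPACE_ROOT) -> Path:
    """Return an absolute path inside root, or raise PermissionError if it escapes."""
    root = root.resolve()
    # Joining an absolute path replaces root, and resolve() collapses ".." and follows
    # symlinks, so every escape attempt is caught by the check below.
    candidate = (root / relative).resolve()
    if not candidate.is_relative_to(root):
        raise PermissionError(f"{relative!r} is outside the workspace")
    return candidate


def read_workspace_file(relative: str, root: Path = WORKSPACE_ROOT) -> str:
    """Read a text file, but only from inside the workspace."""
    return resolve_in_workspace(relative, root).read_text()
```

At the container level, the same boundary can be reinforced by mounting only the project directory as a volume, read-only (`:ro`) when the agent does not need to write.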

Why this matters for the AI community

This approach aligns strongly with community values:

- Accessibility: anyone can run it with `docker compose up`

- Responsibility: no hidden permissions

- Transparency: architecture is explicit

- Reusability: patterns apply beyond demos

Compose does not replace cloud platforms or Kubernetes. It creates a trustworthy starting point.

From local experiments to real systems

Docker Compose is often the first step:

| Stage | Tool |
|---|---|
| Learning & demos | Docker Compose |
| Team collaboration | Shared Compose profiles |
| Automation | Headless Compose in CI |
| Production | Kubernetes or managed runtimes |

The architecture remains consistent; only the execution environment changes.
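One way to keep the architecture identical across these stages is to have each service read its endpoints from environment variables, so only the environment changes. Below is a minimal Python sketch; the variable names `TOOLS_API_URL` and `MODEL_URL` and the default ports are hypothetical. The defaults assume the Compose service names, which Compose makes resolvable on the project's default network.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AgentConfig:
    tools_api_url: str
    model_url: str


def load_config(env: Mapping[str, str] = os.environ) -> AgentConfig:
    """Build the agent's config from the environment, falling back to Compose service names."""
    # Under Compose, "tools-api" and "model" resolve via the project's default network.
    # In CI or Kubernetes, set the variables to point at whatever the platform provides.
    return AgentConfig(
        tools_api_url=env.get("TOOLS_API_URL", "http://tools-api:8000"),
        model_url=env.get("MODEL_URL", "http://model:8080"),
    )


if __name__ == "__main__":
    print(load_config())
```

With the defaults, the code targets the Compose service names; in CI or on Kubernetes, the same code runs unchanged once the variables point at that platform's endpoints.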

Final thought

AI agents should not feel magical or dangerous.

If you can explain your agent using a docker-compose.yml, you are already building something real.